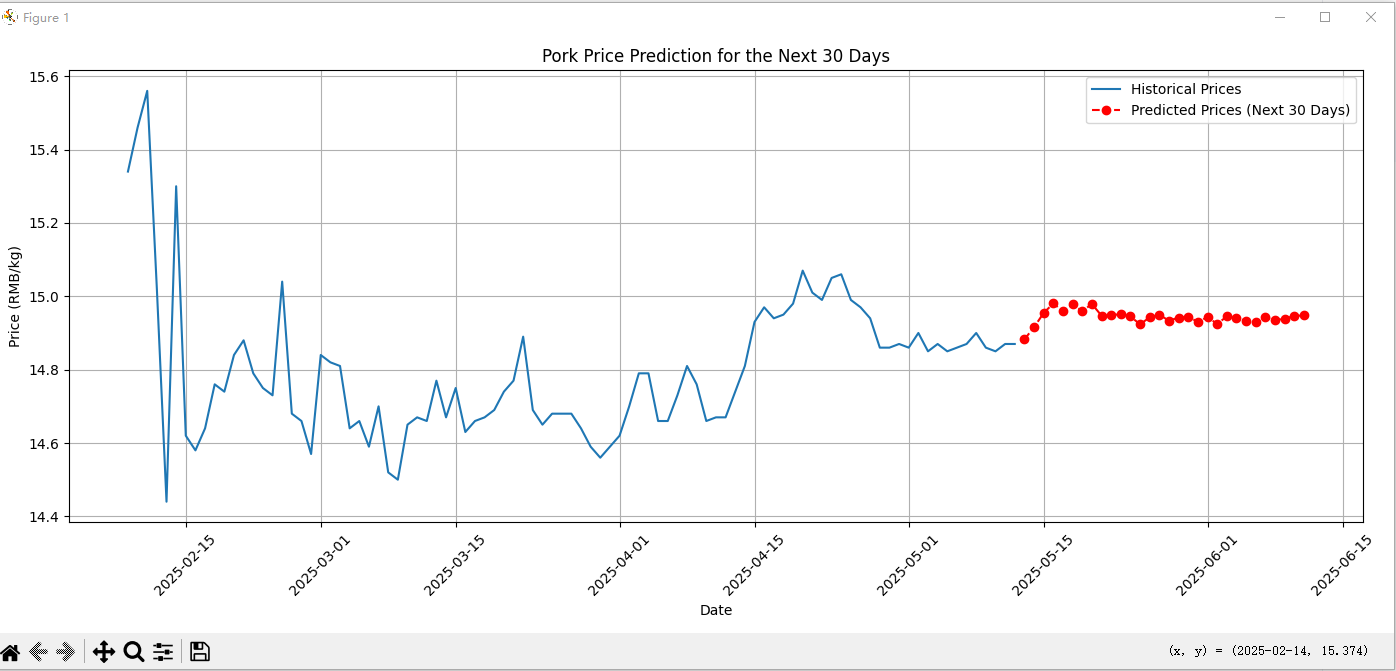

May 14: writing up the pork price prediction assignment.

CNN+LSTM+GRU

Time-series model

Two main challenges of the assignment:

Feature processing (Out-Of-Distribution)

Model construction (Noisy-Labels)
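The two challenges can be made concrete with a small pure-Python sketch. Both remedies are assumptions, not necessarily what the assignment used. For out-of-distribution inputs, normalization statistics are fitted on the training window only and then reused for the test window. For noisy labels, a Huber loss grows linearly rather than quadratically for large residuals, so a few mislabeled prices pull less on training. The function names are hypothetical.

```python
import math
from typing import List, Tuple


def fit_zscore(train: List[float]) -> Tuple[float, float]:
    """Compute mean/std from the training window only, so test data never leaks in."""
    mean = sum(train) / len(train)
    var = sum((x - mean) ** 2 for x in train) / len(train)
    std = math.sqrt(var) or 1.0  # guard against a constant series
    return mean, std


def apply_zscore(xs: List[float], mean: float, std: float) -> List[float]:
    """Normalize any split (train or test) with the training statistics."""
    return [(x - mean) / std for x in xs]


def huber(residual: float, delta: float = 1.0) -> float:
    """Quadratic near zero, linear beyond delta: large (possibly noisy) errors count less."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)


if __name__ == "__main__":
    prices = [15.34, 15.46, 15.56, 15.02, 14.44, 15.3, 14.62, 14.58]  # first rows of the table
    train, test = prices[:6], prices[6:]
    mean, std = fit_zscore(train)
    print(apply_zscore(test, mean, std))
    print(huber(0.5), huber(3.0))  # 0.125 2.5
```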

Scraping the dataset

Dataset

| Date | Price |
|---|---|
| 2025/2/9 | 15.34 |
| 2025/2/10 | 15.46 |
| 2025/2/11 | 15.56 |
| 2025/2/12 | 15.02 |
| 2025/2/13 | 14.44 |
| 2025/2/14 | 15.3 |
| 2025/2/15 | 14.62 |
| 2025/2/16 | 14.58 |
| 2025/2/17 | 14.64 |
| 2025/2/18 | 14.76 |
| 2025/2/19 | 14.74 |
| 2025/2/20 | 14.84 |
| 2025/2/21 | 14.88 |
| 2025/2/22 | 14.79 |
| 2025/2/23 | 14.75 |
| 2025/2/24 | 14.73 |
| 2025/2/25 | 15.04 |
| 2025/2/26 | 14.68 |
| 2025/2/27 | 14.66 |
| 2025/2/28 | 14.57 |
| 2025/3/1 | 14.84 |
| 2025/3/2 | 14.82 |
| 2025/3/3 | 14.81 |
| 2025/3/4 | 14.64 |
| 2025/3/5 | 14.66 |
| 2025/3/6 | 14.59 |
| 2025/3/7 | 14.7 |
| 2025/3/8 | 14.52 |
| 2025/3/9 | 14.5 |
| 2025/3/10 | 14.65 |
| 2025/3/11 | 14.67 |
| 2025/3/12 | 14.66 |
| 2025/3/13 | 14.77 |
| 2025/3/14 | 14.67 |
| 2025/3/15 | 14.75 |
| 2025/3/16 | 14.63 |
| 2025/3/17 | 14.66 |
| 2025/3/18 | 14.67 |
| 2025/3/19 | 14.69 |
| 2025/3/20 | 14.74 |
| 2025/3/21 | 14.77 |
| 2025/3/22 | 14.89 |
| 2025/3/23 | 14.69 |
| 2025/3/24 | 14.65 |
| 2025/3/25 | 14.68 |
| 2025/3/26 | 14.68 |
| 2025/3/27 | 14.68 |
| 2025/3/28 | 14.64 |
| 2025/3/29 | 14.59 |
| 2025/3/30 | 14.56 |
| 2025/3/31 | 14.59 |
| 2025/4/1 | 14.62 |
| 2025/4/2 | 14.7 |
| 2025/4/3 | 14.79 |
| 2025/4/4 | 14.79 |
| 2025/4/5 | 14.66 |
| 2025/4/6 | 14.66 |
| 2025/4/7 | 14.73 |
| 2025/4/8 | 14.81 |
| 2025/4/9 | 14.76 |
| 2025/4/10 | 14.66 |
| 2025/4/11 | 14.67 |
| 2025/4/12 | 14.67 |
| 2025/4/13 | 14.74 |
| 2025/4/14 | 14.81 |
| 2025/4/15 | 14.93 |
| 2025/4/16 | 14.97 |
| 2025/4/17 | 14.94 |
| 2025/4/18 | 14.95 |
| 2025/4/19 | 14.98 |
| 2025/4/20 | 15.07 |
| 2025/4/21 | 15.01 |
| 2025/4/22 | 14.99 |
| 2025/4/23 | 15.05 |
| 2025/4/24 | 15.06 |
| 2025/4/25 | 14.99 |
| 2025/4/26 | 14.97 |
| 2025/4/27 | 14.94 |
| 2025/4/28 | 14.86 |
| 2025/4/29 | 14.86 |
| 2025/4/30 | 14.87 |
| 2025/5/1 | 14.86 |
| 2025/5/2 | 14.9 |
| 2025/5/3 | 14.85 |
| 2025/5/4 | 14.87 |
| 2025/5/5 | 14.85 |
| 2025/5/6 | 14.86 |
| 2025/5/7 | 14.87 |
| 2025/5/8 | 14.9 |
| 2025/5/9 | 14.86 |
| 2025/5/10 | 14.85 |
| 2025/5/11 | 14.87 |
| 2025/5/12 | 14.87 |
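The table's dates use a `YYYY/M/D` format without zero padding. A minimal loading sketch, assuming the table has been saved as CSV text with `Date,Price` columns; the helper names are hypothetical. It also checks that the series has exactly one row per calendar day, which a sliding-window model silently relies on.

```python
import csv
import io
from datetime import date, datetime, timedelta
from typing import List, Tuple


def load_prices(text: str) -> List[Tuple[date, float]]:
    """Parse 'Date,Price' CSV text where dates look like 2025/2/9."""
    rows = []
    for rec in csv.DictReader(io.StringIO(text)):
        # %m and %d also accept non-zero-padded values such as "2" and "9".
        d = datetime.strptime(rec["Date"], "%Y/%m/%d").date()
        rows.append((d, float(rec["Price"])))
    rows.sort(key=lambda r: r[0])
    return rows


def missing_days(rows: List[Tuple[date, float]]) -> List[date]:
    """Return calendar days absent between the first and last date."""
    present = {d for d, _ in rows}
    gaps = []
    cur, end = rows[0][0], rows[-1][0]
    while cur <= end:
        if cur not in present:
            gaps.append(cur)
        cur += timedelta(days=1)
    return gaps


# Four rows copied from the table, spanning the February/March boundary.
sample = "Date,Price\n2025/2/27,14.66\n2025/2/28,14.57\n2025/3/1,14.84\n2025/3/2,14.82\n"
rows = load_prices(sample)
print(rows[-1], missing_days(rows))
```

For the full table in a file (hypothetical name `pork_prices.csv`), read it with `open("pork_prices.csv", encoding="utf-8")` and pass `f.read()` to `load_prices`.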

Prediction code

Importing third-party libraries

```python
import numpy as np
```

Configuring Chinese font support in matplotlib

```python
# Set a font that supports Chinese characters
```
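A common way to render Chinese labels in matplotlib, sketched under the assumption that a CJK font such as SimHei is installed (the font names are assumptions; substitute any CJK font available on your system). Setting `axes.unicode_minus` to `False` makes negative tick labels use an ASCII hyphen, because many CJK fonts lack the Unicode minus glyph and would otherwise show a box.

```python
import matplotlib

matplotlib.use("Agg")  # headless backend; drop this line in a notebook
import matplotlib.pyplot as plt

# Candidate fonts, tried in list order when text is rendered.
plt.rcParams["font.sans-serif"] = ["SimHei", "Microsoft YaHei", "DejaVu Sans"]
plt.rcParams["font.family"] = "sans-serif"
# Render '-' as an ASCII hyphen instead of the Unicode minus sign.
plt.rcParams["axes.unicode_minus"] = False
```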

Attention mechanism

```python
class Attention(nn.Module):
    ...
```
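The body of the attention class is not shown. One common choice for recurrent models, sketched here as an assumption rather than the author's exact code, is additive attention pooling: score every time step of the LSTM/GRU output, softmax the scores over time, and return the weighted sum as a single context vector.

```python
import torch
import torch.nn as nn


class Attention(nn.Module):
    """Additive attention pooling over the time axis (a sketch, not the original)."""

    def __init__(self, hidden_size: int):
        super().__init__()
        # Project each time step, then reduce it to one relevance score.
        self.proj = nn.Linear(hidden_size, hidden_size)
        self.score = nn.Linear(hidden_size, 1, bias=False)

    def forward(self, h: torch.Tensor):
        # h: (batch, seq_len, hidden_size), e.g. the output of an LSTM/GRU
        scores = self.score(torch.tanh(self.proj(h)))  # (batch, seq_len, 1)
        weights = torch.softmax(scores, dim=1)          # normalize over time
        context = (weights * h).sum(dim=1)              # (batch, hidden_size)
        return context, weights.squeeze(-1)


attn = Attention(hidden_size=16)
ctx, w = attn(torch.randn(4, 10, 16))
print(ctx.shape, w.shape)  # torch.Size([4, 16]) torch.Size([4, 10])
```

Returning the weights as well makes it possible to plot which past days the model attended to.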

Feature processing

```python
# Extract time features
```
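The feature code is cut off after its first comment. A sketch of calendar features for a daily series, assuming day-of-week and day-of-month are useful inputs (the post does not list its features; this set is hypothetical). Encoding each cycle as a sine/cosine pair keeps Sunday next to Monday, and unlike a raw day index the values repeat, so test dates map onto ranges already seen in training, which helps with the out-of-distribution problem.

```python
import math
from datetime import date
from typing import Dict


def time_features(d: date) -> Dict[str, float]:
    """Cyclical calendar features for one date (hypothetical feature set)."""
    dow = d.weekday()  # Monday = 0 ... Sunday = 6
    dom = d.day - 1    # 0 ... 30
    return {
        "dow_sin": math.sin(2 * math.pi * dow / 7),
        "dow_cos": math.cos(2 * math.pi * dow / 7),
        # 31 is used as an approximate month length for every month.
        "dom_sin": math.sin(2 * math.pi * dom / 31),
        "dom_cos": math.cos(2 * math.pi * dom / 31),
        "is_weekend": 1.0 if dow >= 5 else 0.0,
    }


print(time_features(date(2025, 5, 12)))
```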

Creating the training and test datasets

```python
# Define the dataset class
```
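A minimal sliding-window dataset, assuming the model reads the previous `window` prices and predicts the next one; the class, helper, and parameter names are hypothetical. The split is chronological: the last `test_size` days are held out, so no future price leaks into training even though batches within the training split are shuffled.

```python
from typing import List, Sequence, Tuple

import torch
from torch.utils.data import DataLoader, Dataset


class PriceWindowDataset(Dataset):
    """Each sample: `window` consecutive values -> the value right after them."""

    def __init__(self, series: Sequence[float], window: int):
        if len(series) <= window:
            raise ValueError("series must be longer than the window")
        self.x = torch.tensor(list(series), dtype=torch.float32)
        self.window = window

    def __len__(self) -> int:
        return len(self.x) - self.window

    def __getitem__(self, i: int) -> Tuple[torch.Tensor, torch.Tensor]:
        seq = self.x[i : i + self.window].unsqueeze(-1)  # (window, 1 feature)
        target = self.x[i + self.window]                  # scalar
        return seq, target


def chronological_split(series: Sequence[float], test_size: int) -> Tuple[List[float], List[float]]:
    """Earlier part for training, the last `test_size` points for testing."""
    cut = len(series) - test_size
    return list(series[:cut]), list(series[cut:])


prices = [15.34, 15.46, 15.56, 15.02, 14.44, 15.3, 14.62, 14.58, 14.64, 14.76]
train, test = chronological_split(prices, test_size=2)
ds = PriceWindowDataset(train, window=3)
loader = DataLoader(ds, batch_size=2, shuffle=True)
xb, yb = next(iter(loader))
print(len(ds), xb.shape, yb.shape)  # 5 torch.Size([2, 3, 1]) torch.Size([2])
```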

Defining the model

```python
# Define a multi-layer LSTM-GRU hybrid model
```
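The model code is not preserved beyond its comment. Based on the post's CNN+LSTM+GRU title, one plausible layout is sketched here; the layer order, sizes, and inline attention pooling are all assumptions. A 1-D convolution extracts short local patterns, a stacked LSTM followed by a GRU models longer dependencies, attention pools the time steps, and a linear head outputs the next price.

```python
import torch
import torch.nn as nn


class CNNLSTMGRU(nn.Module):
    """Conv1d -> multi-layer LSTM -> GRU -> attention pooling -> linear (a sketch)."""

    def __init__(self, n_features: int = 1, conv_channels: int = 16,
                 hidden: int = 32, lstm_layers: int = 2, dropout: float = 0.2):
        super().__init__()
        # Conv1d expects (batch, channels, time); padding=1 keeps the sequence length.
        self.conv = nn.Sequential(
            nn.Conv1d(n_features, conv_channels, kernel_size=3, padding=1),
            nn.ReLU(),
        )
        # PyTorch applies this dropout between stacked LSTM layers, not after the last.
        self.lstm = nn.LSTM(conv_channels, hidden, num_layers=lstm_layers,
                            batch_first=True, dropout=dropout)
        self.gru = nn.GRU(hidden, hidden, batch_first=True)
        # Additive attention: one score per time step.
        self.attn = nn.Sequential(nn.Linear(hidden, hidden), nn.Tanh(),
                                  nn.Linear(hidden, 1, bias=False))
        self.head = nn.Linear(hidden, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq_len, n_features)
        z = self.conv(x.transpose(1, 2)).transpose(1, 2)  # (batch, seq_len, conv_channels)
        z, _ = self.lstm(z)                                  # (batch, seq_len, hidden)
        z, _ = self.gru(z)                                   # (batch, seq_len, hidden)
        w = torch.softmax(self.attn(z), dim=1)               # (batch, seq_len, 1)
        context = (w * z).sum(dim=1)                         # (batch, hidden)
        return self.head(context).squeeze(-1)                # (batch,)


model = CNNLSTMGRU()
out = model(torch.randn(8, 14, 1))
print(out.shape)  # torch.Size([8])
```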

Setting hyperparameters

```python
# Set hyperparameters
```
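The actual values are not shown. A sketch that gathers the hyperparameters in one frozen dataclass, with every value a hypothetical placeholder. One check worth keeping is how many training windows remain after holding out the test days, since the table above covers only 93 days.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    """Hypothetical hyperparameters; tune them for your own data."""
    window: int = 14       # days of history per sample
    test_size: int = 14    # last N days held out for testing
    hidden: int = 32
    lstm_layers: int = 2
    dropout: float = 0.2
    lr: float = 1e-3
    epochs: int = 200
    batch_size: int = 16
    seed: int = 42

    def n_train_samples(self, n_days: int) -> int:
        """Sliding-window samples available in the training split."""
        return max(0, n_days - self.test_size - self.window)


cfg = Config()
print(cfg.n_train_samples(93))  # 65
```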

Training the model

```python
# Train the model
```
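A minimal training loop, assuming MSE loss, the Adam optimizer, and gradient clipping; the post does not state its loss or optimizer, and `nn.HuberLoss` is a drop-in alternative when labels are noisy. So that it runs on its own, it trains a tiny stand-in LSTM (hypothetical `TinyLSTM`) on a synthetic sine wave; swap in the real model and DataLoader.

```python
import math

import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


class TinyLSTM(nn.Module):
    """Stand-in model: LSTM over the window, linear head on the last step."""

    def __init__(self, hidden: int = 16):
        super().__init__()
        self.lstm = nn.LSTM(1, hidden, batch_first=True)
        self.head = nn.Linear(hidden, 1)

    def forward(self, x):
        out, _ = self.lstm(x)
        return self.head(out[:, -1]).squeeze(-1)


def train(model, loader, epochs=30, lr=1e-2):
    """Return the mean training loss of each epoch."""
    loss_fn = nn.MSELoss()  # nn.HuberLoss() is more tolerant of noisy labels
    opt = torch.optim.Adam(model.parameters(), lr=lr)
    history = []
    for _ in range(epochs):
        model.train()
        total = 0.0
        for xb, yb in loader:
            opt.zero_grad()
            loss = loss_fn(model(xb), yb)
            loss.backward()
            # Clip gradients to keep recurrent training stable.
            nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)
            opt.step()
            total += loss.item() * len(xb)
        history.append(total / len(loader.dataset))
    return history


torch.manual_seed(0)
series = torch.tensor([math.sin(0.3 * t) for t in range(120)], dtype=torch.float32)
window = 10
X = torch.stack([series[i:i + window] for i in range(len(series) - window)]).unsqueeze(-1)
y = series[window:]
loader = DataLoader(TensorDataset(X, y), batch_size=16, shuffle=True)
history = train(TinyLSTM(), loader)
print(f"first epoch {history[0]:.4f}, last epoch {history[-1]:.4f}")
```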

Visualization

```python
import matplotlib.pyplot as plt

plt.figure(figsize=(14, 5))
```
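The plotting code stops after `plt.figure`. A sketch of a typical actual-versus-predicted plot, keeping the post's 14×5 figure size; the function name, labels, and output path are hypothetical, and the Agg backend is used so it runs without a display. The "prediction" in the usage is a naive previous-day baseline built from the table, not model output.

```python
import os
import tempfile
from typing import Sequence

import matplotlib

matplotlib.use("Agg")  # render to files, no display needed
import matplotlib.pyplot as plt


def plot_actual_vs_pred(dates: Sequence[str], actual: Sequence[float],
                        predicted: Sequence[float], path: str) -> str:
    """Overlay actual and predicted prices and save the figure to `path`."""
    fig, ax = plt.subplots(figsize=(14, 5))
    ax.plot(dates, actual, label="Actual", marker="o")
    ax.plot(dates, predicted, label="Predicted", linestyle="--", marker="x")
    ax.set_xlabel("Date")
    ax.set_ylabel("Price")
    ax.set_title("Pork price: actual vs. predicted")
    ax.legend()
    ax.grid(alpha=0.3)
    fig.autofmt_xdate()  # rotate x labels so they do not overlap
    fig.savefig(path, dpi=100, bbox_inches="tight")
    plt.close(fig)
    return path


# May 10-12 from the table; "predicted" repeats the previous day's price.
out = plot_actual_vs_pred(
    ["2025/5/10", "2025/5/11", "2025/5/12"],
    [14.85, 14.87, 14.87],
    [14.86, 14.85, 14.87],
    os.path.join(tempfile.gettempdir(), "pork_test_pred.png"),
)
```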

Forecast of future pork prices:

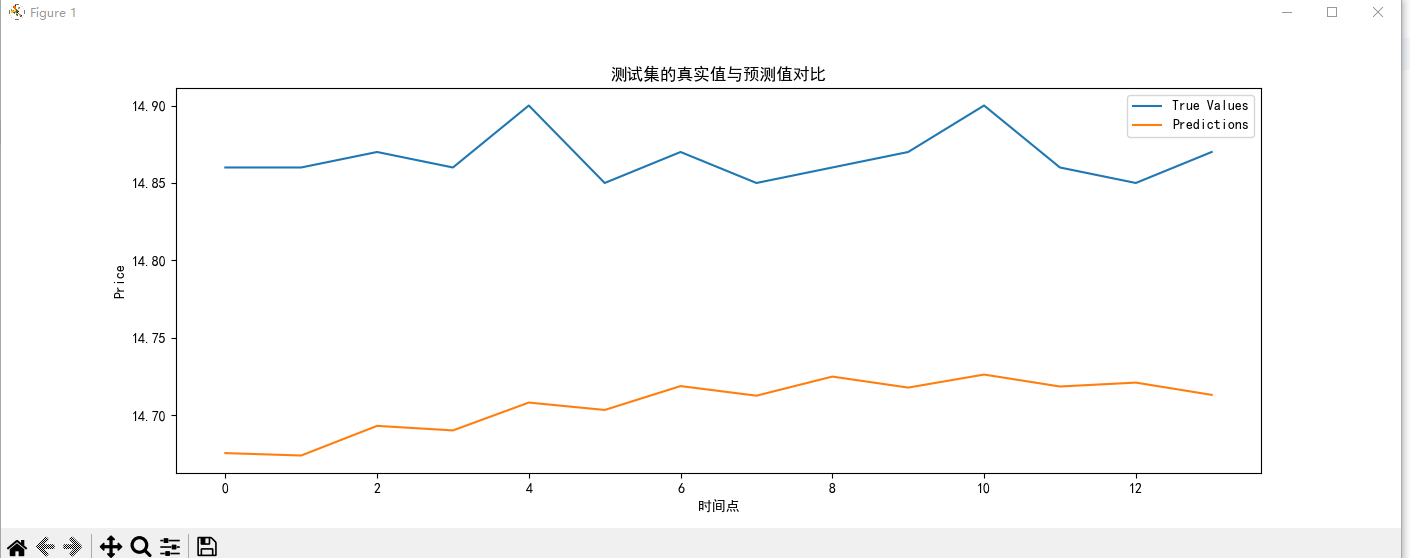
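Forecasting past the last observed date (2025/5/12) requires feeding each prediction back in as input. A sketch of that recursive multi-step loop, assuming a one-step model; `predict_next` is a hypothetical callable standing in for the trained network, and the window-mean predictor in the usage is only a toy. Errors compound with every step, so later days in such a forecast deserve less trust.

```python
from datetime import date, timedelta
from typing import Callable, List, Sequence, Tuple


def recursive_forecast(history: Sequence[float], last_date: date, steps: int,
                       window: int, predict_next: Callable[[List[float]], float]
                       ) -> List[Tuple[date, float]]:
    """Roll a one-step predictor forward `steps` days, appending each prediction."""
    buf = list(history[-window:])
    out = []
    for k in range(1, steps + 1):
        y = predict_next(buf[-window:])
        out.append((last_date + timedelta(days=k), y))
        buf.append(y)  # the prediction becomes input for the next step
    return out


def mean_predictor(xs: List[float]) -> float:
    """Toy stand-in for a trained model: average of the window."""
    return sum(xs) / len(xs)


recent = [14.86, 14.85, 14.87, 14.87]  # last four prices in the table (May 9-12)
for d, p in recursive_forecast(recent, date(2025, 5, 12), steps=3, window=4,
                               predict_next=mean_predictor):
    print(d.isoformat(), round(p, 3))
```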

Test-set visualization
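A test-set plot is easier to judge alongside numbers. A sketch of common regression metrics (MAE, RMSE, MAPE); the post does not say which metrics it used, so this choice is an assumption. For a series as flat as this one, comparing against a naive "tomorrow equals today" baseline is a useful sanity check.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """MAE, RMSE and MAPE (in percent) for paired sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    errs = [p - t for t, p in zip(y_true, y_pred)]
    n = len(errs)
    return {
        "MAE": sum(abs(e) for e in errs) / n,
        "RMSE": math.sqrt(sum(e * e for e in errs) / n),
        "MAPE%": 100 * sum(abs(e / t) for e, t in zip(errs, y_true)) / n,
    }


# May 1-12 prices from the table; the baseline predicts each day with the previous day.
prices = [14.86, 14.9, 14.85, 14.87, 14.85, 14.86, 14.87, 14.9, 14.86, 14.85, 14.87, 14.87]
baseline = regression_metrics(prices[1:], prices[:-1])
print({k: round(v, 4) for k, v in baseline.items()})
```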

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit Whz's Note when reposting.
